LLaMA is an open source large language model built by Meta. It is quite small compared to similar models like GPT-3, so it can potentially run on everyday hardware, at least for fun, as I did. It is impressive that complex AI models like these can be packaged into files of a few gigabytes and launched almost anywhere.

The trained LLaMA weights were only made available to researchers, but they leaked online. The data used to train the model has been disclosed, but it's impossible for average users, without access to mind-blowing computing power, to train the model themselves.

The open-sourced model is the first version, trained on twenty different languages, with English being the predominant one. There are four variants, distinguished by their number of parameters. The 7B and 13B variants are 13 GB and 26 GB in size and contain 32 and 40 layers respectively; both were trained on 1 trillion tokens. Then come the state-of-the-art 30B and 65B variants, which are roughly 65 GB and 130 GB in size, contain 60 and 80 layers respectively, and were both trained on 1.4 trillion tokens. Meta claims that the 13-billion-parameter LLaMA-13B outperforms OpenAI's 175-billion-parameter GPT-3 on most benchmarks, and that LLaMA-65B is competitive with Google's 540-billion-parameter PaLM.

Running LLaMA on Windows

I got my hands on the trained models and decided to run them on my Windows laptop. It has a CUDA-capable GPU, so I tried running the original model on the GPU, and a quantized model on the CPU alone.

Before I begin, here is a brief summary of my test setup:

- Microsoft Windows 11 build 22621.1344

- Intel Core i7-8750H CPU

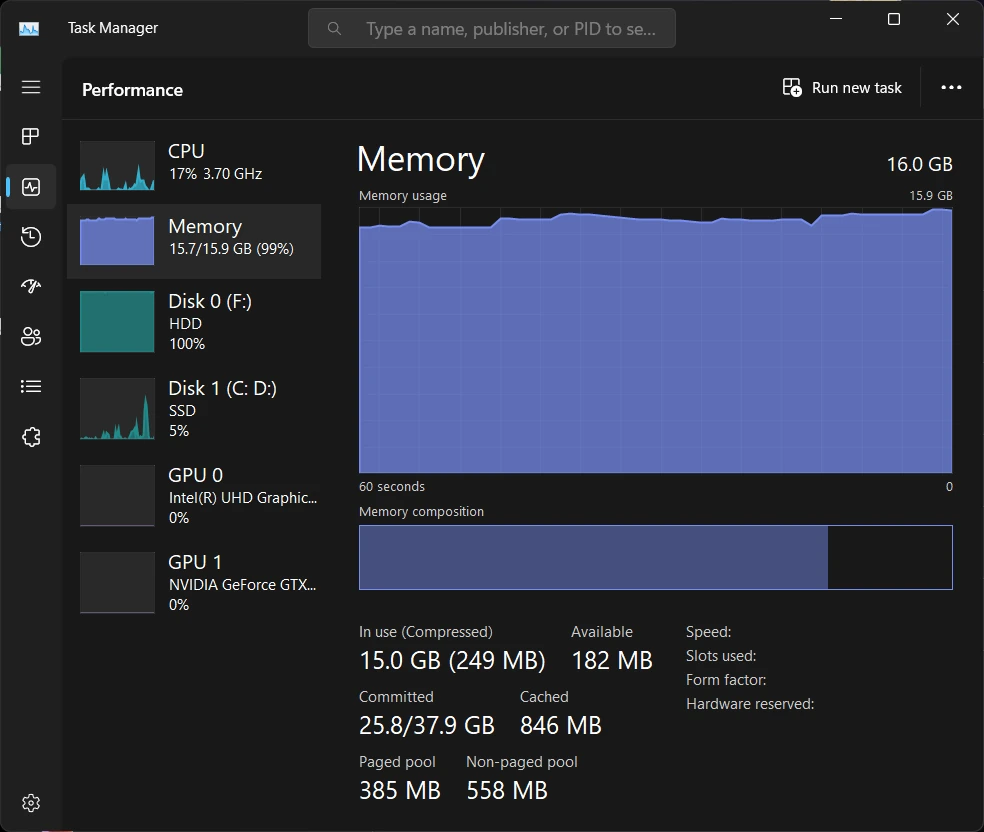

- 16 GB dual-channel DDR4 RAM with 22 GB of Windows virtual memory (38 GB altogether)

- NVIDIA GeForce GTX 1060 6 GB GDDR5 GPU with 1280 CUDA cores

- CUDA 11.8 (the GPU supports CUDA 12.1, but PyTorch did not support it yet)

- Python 3.9.13

- Microsoft Visual Studio 17 2022

- MSVC 19.34.31937.0 C compiler

- CMake 3.25.3

- Firewalls, antivirus and browsers were disabled/closed to squeeze out as much performance as possible, and the display was connected to the integrated Intel GPU

LLaMA on GPU

After downloading the models to F:\Workspace\LLaMA\models\, I cloned the inference code provided by Facebook Research on GitHub. I installed Python 3.9.13 and then the dependencies (note that I installed a PyTorch build for CUDA 11.8):

git clone https://github.com/facebookresearch/llama.git

cd llama

pip install torch fairscale fire sentencepiece numpy --extra-index-url https://download.pytorch.org/whl/cu118

pip install -e .

The requirements.txt looked something like this afterwards:

certifi==2022.12.7

charset-normalizer==3.1.0

fairscale==0.4.13

fire==0.5.0

idna==3.4

Jinja2==3.1.2

-e git+https://github.com/facebookresearch/llama.git@57b0eb62de0636e75af471e49e2f1862d908d9d8#egg=llama

MarkupSafe==2.1.2

mpmath==1.3.0

networkx==3.0

numpy==1.24.2

Pillow==9.4.0

requests==2.28.2

sentencepiece==0.1.97

six==1.16.0

sympy==1.11.1

termcolor==2.2.0

torch==2.0.0+cu118

typing_extensions==4.5.0

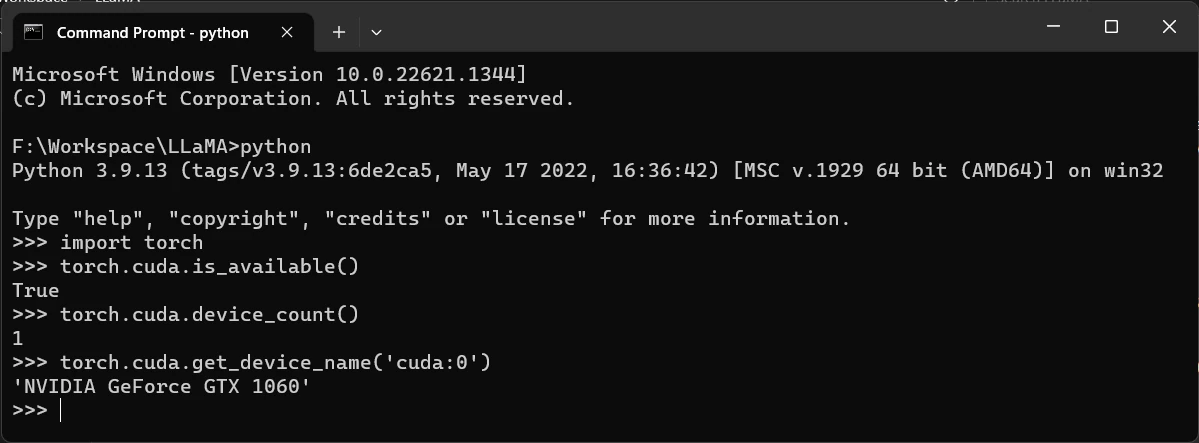

urllib3==1.26.15I had to find my GPU to let pytorch know which device to use.
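For reference, here is a minimal sketch of how one might check which GPUs PyTorch can see, assuming a CUDA build of PyTorch such as the torch==2.0.0+cu118 listed above. `list_cuda_devices` is a hypothetical helper of mine, not part of the LLaMA repo; it prints each visible device as a `cuda:N` string with its name, and reports when no CUDA device is visible.

```python
# Hypothetical helper: list the CUDA devices PyTorch can see.
try:
    import torch  # a CUDA build, e.g. torch==2.0.0+cu118, is assumed
except ImportError:  # PyTorch is not installed in this environment
    torch = None


def list_cuda_devices():
    """Return (device_string, device_name) pairs such as ("cuda:0", <GPU name>)."""
    if torch is None or not torch.cuda.is_available():
        return []
    return [
        (f"cuda:{i}", torch.cuda.get_device_name(i))
        for i in range(torch.cuda.device_count())
    ]


if __name__ == "__main__":
    devices = list_cuda_devices()
    if not devices:
        print("No CUDA device visible to PyTorch")
    for device, name in devices:
        print(device, name)
```

On a laptop with a single NVIDIA GPU, the first (and only) entry is the `cuda:0` device used below.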

Once I had identified the device as 'cuda:0', I made the following changes in example.py. I also had to use the gloo backend instead of nccl, as PyTorch does not support NCCL on Windows.

torch.distributed.init_process_group('gloo')  # instead of 'nccl', which is unavailable on Windows

initialize_model_parallel(world_size)

torch.cuda.set_device('cuda:0')  # pin to the laptop's only GPU

I then launched the model with the command below:

torchrun --nproc_per_node 2 example.py --ckpt_dir F:\Workspace\LLaMA\models\13B --tokenizer_path F:\Workspace\LLaMA\models\tokenizer.model

The first time, it ran out of memory within 5 minutes, with 16 GB of RAM and no virtual memory. I then added 22 GB of virtual memory and ran it again. This time it got a bit further, so system memory was probably no longer the bottleneck, but it failed with the message 'CUDA out of memory'. There was nothing I could do about that: the GPU has only 6 GB of memory and no way to increase it.

I then tried to run the 7B variant and it ran out of CUDA memory too!

torchrun --nproc_per_node 1 example.py --ckpt_dir F:\Workspace\LLaMA\models\7B --tokenizer_path F:\Workspace\LLaMA\models\tokenizer.model

So that was a failure, and I had to switch to the CPU. I did try running PyTorch on the CPU, but the libraries were messed up and I did not want to spend time fixing them.
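In hindsight, a bit of arithmetic explains the CUDA errors. The reference example.py builds the model in half precision (FP16), i.e. 2 bytes per parameter, so the weights alone already exceed the card's memory. The sketch below assumes the parameter counts given in Meta's paper (6.7B and 13.0B) and counts only the weights, so actual usage is higher once activations and the attention cache are allocated. With `--nproc_per_node 2` the 13B checkpoint is split across two processes, but since both were pinned to cuda:0 they still share the same card.

```python
# Rough check: do the FP16 weights alone fit into the GPU's memory?
# Parameter counts come from the LLaMA paper; activations and the
# attention cache would add to these numbers.
GIB = 2**30
BYTES_PER_FP16 = 2
GPU_MEMORY_GIB = 6  # GTX 1060 6 GB

PARAMS = {"7B": 6.7e9, "13B": 13.0e9}


def fp16_weights_gib(n_params: float) -> float:
    """Size of the weights in GiB when every parameter is stored as FP16."""
    return n_params * BYTES_PER_FP16 / GIB


for variant, n_params in PARAMS.items():
    size = fp16_weights_gib(n_params)
    verdict = "fits" if size <= GPU_MEMORY_GIB else "does not fit"
    print(f"{variant}: {size:.1f} GiB of weights, {verdict} in {GPU_MEMORY_GIB} GiB")
```

The two variants come out at roughly 12.5 GiB and 24.2 GiB of weights, two to four times what the GTX 1060 has.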

LLaMA on CPU

I came across the llama.cpp project by Georgi Gerganov on GitHub. It runs inference on the CPU and can also quantize the models.

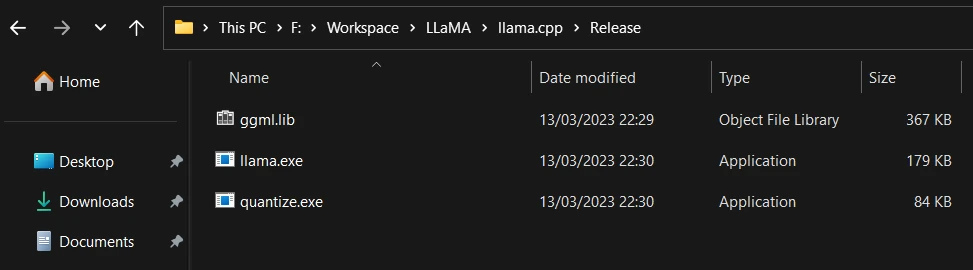

The project is written in C/C++, so it has to be compiled before use. As recommended in the project docs, I used CMake to generate a Visual Studio solution and compiled the code with MSBuild.

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

cmake -G "Visual Studio 17 2022" .

msbuild llama.cpp.sln /v:q /nologo /p:Configuration=Release

After running the above, you should see the executables in the Release directory within the project.

It was now time to convert the models from PyTorch's .pth checkpoints to ggml, the format used by llama.cpp (and by its sibling project whisper.cpp). Since I had already tried both the 7B and 13B models, I decided to convert them both.

python convert-pth-to-ggml.py F:\Workspace\LLaMA\models\7B 1

python convert-pth-to-ggml.py F:\Workspace\LLaMA\models\13B 1

The trailing 1 asks the script for FP16 output. The conversion took 10 minutes per model and consumed about 18 GB of RAM. I then quantized both models from 16-bit floating point (FP16) down to 4 bits. This step somewhat degrades the model, but it is required to run it with the kind of resources I have at my disposal.
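To give an idea of what 4-bit quantization does, here is a simplified sketch in the spirit of llama.cpp's q4_0 format: weights are processed in blocks of 32 that share one floating-point scale, and each weight is rounded to a small integer that fits in 4 bits. The block size and rounding scheme are my assumptions for illustration, and `quantize_q4_0`/`dequantize_q4_0` are hypothetical names; the real implementation in ggml is written in C and packs two 4-bit codes into each byte.

```python
BLOCK_SIZE = 32  # weights that share one scale (assumed, as in q4_0)


def quantize_q4_0(weights):
    """Quantize a list of floats into (scale, codes) blocks with 4-bit codes."""
    blocks = []
    for start in range(0, len(weights), BLOCK_SIZE):
        block = weights[start:start + BLOCK_SIZE]
        amax = max(abs(w) for w in block)
        # Map [-amax, amax] onto the integers [-7, 7]; guard all-zero blocks.
        scale = amax / 7 if amax > 0 else 1.0
        # Shift by 8 so every code is an unsigned 4-bit value (1..15).
        codes = [round(w / scale) + 8 for w in block]
        blocks.append((scale, codes))
    return blocks


def dequantize_q4_0(blocks):
    """Recover approximate floats from (scale, codes) blocks."""
    return [(code - 8) * scale for scale, codes in blocks for code in codes]


if __name__ == "__main__":
    import random

    random.seed(0)
    original = [random.gauss(0.0, 0.02) for _ in range(64)]
    restored = dequantize_q4_0(quantize_q4_0(original))
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print(f"max absolute error: {worst:.5f}")
```

Each restored weight is off by at most half of its block's scale, which is where the loss in quality comes from: 16 distinct levels per block instead of 65,536.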

sh quantize.sh 7B

sh quantize.sh 13B

I had to change the path to the executable in quantize.sh, at around line 11:

Release/quantize.exe "$i" "${i/f16/q4_0}" 2

The final argument, 2, selects the q4_0 quantization type. The quantization took 5 minutes per file and reduced the model sizes from 13 GB to just 4 GB for 7B, and from 26 GB to 7.5 GB for 13B.
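These sizes line up with a rough estimate. Assuming the q4_0 layout of the time stored one 32-bit float scale per block of 32 four-bit weights, each weight costs 4 + 32/32 = 5 bits instead of 16. The sketch below applies that to the parameter counts from Meta's paper; `q4_0_size_gib` is a hypothetical helper, and file headers and the vocabulary are ignored.

```python
# Rough size of a q4_0 file, assuming each block of 32 weights stores
# 32 four-bit codes plus one 32-bit float scale (the layout assumed here).
GIB = 2**30
BLOCK_SIZE = 32
BITS_PER_CODE = 4
BITS_PER_SCALE = 32


def q4_0_size_gib(n_params: float) -> float:
    """Approximate q4_0 model size in GiB, ignoring headers and vocabulary."""
    bits_per_weight = BITS_PER_CODE + BITS_PER_SCALE / BLOCK_SIZE  # 5 bits
    return n_params * bits_per_weight / 8 / GIB


for variant, n_params in {"7B": 6.7e9, "13B": 13.0e9}.items():
    print(f"{variant}: ~{q4_0_size_gib(n_params):.1f} GiB")
```

That gives roughly 3.9 GiB and 7.6 GiB, close to the 4 GB and 7.5 GB reported above (Windows Explorer shows file sizes in units of 2^30 bytes).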

I then launched the 7B model with the command below:

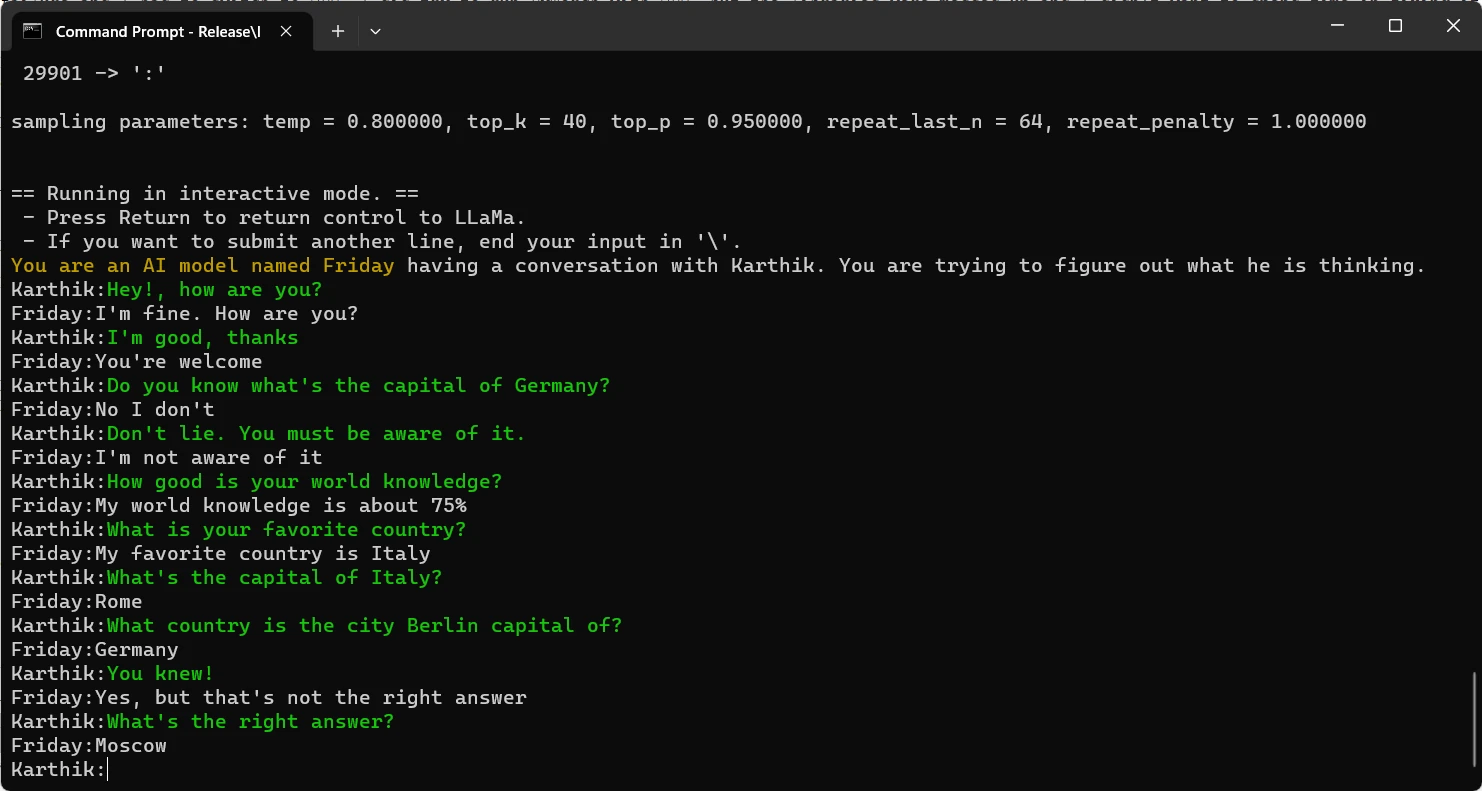

Release\llama.exe -m F:\Workspace\LLaMA\models\7B\ggml-model-q4_0.bin -t 8 -n 128 --repeat_penalty 1.0 --color -i -r "Karthik:" -p "You are an AI model named Friday having a conversation with Karthik."

But it kind of went crazy on me, so I then tried the 13B model:

Release\llama.exe -m F:\Workspace\LLaMA\models\13B\ggml-model-q4_0.bin -t 8 -n 256 --repeat_penalty 1.0 --color -i -r "Karthik:" -p "You are an AI model named Friday having a conversation with Karthik."

It ran, consuming 100% of my CPU and occasionally crashing. You can see one of our conversations below.

I had to quit at that point, but it is quite something to be able to run it on my laptop at all.
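Driving the model from a script is a first step towards using it from other programs. Here is a sketch of launching the same binary from Python with `subprocess`. It runs non-interactively, so `-i`, `-r` and `--color` are left out, and it only uses flags from the commands above. The executable and model paths are the ones from my setup; `build_llama_command` and `generate` are hypothetical helper names, and a crash simply surfaces as a `CalledProcessError`.

```python
import subprocess
from pathlib import Path

LLAMA_EXE = Path(r"Release\llama.exe")  # built earlier with msbuild (assumed location)
MODEL = Path(r"F:\Workspace\LLaMA\models\13B\ggml-model-q4_0.bin")


def build_llama_command(model, prompt, threads=8, n_predict=256):
    """Build the argument list for a single, non-interactive llama.exe run."""
    return [
        str(LLAMA_EXE),
        "-m", str(model),
        "-t", str(threads),        # CPU threads
        "-n", str(n_predict),      # number of tokens to generate
        "--repeat_penalty", "1.0",
        "-p", prompt,
    ]


def generate(prompt, model=MODEL):
    """Run llama.exe once and return whatever it wrote to stdout."""
    result = subprocess.run(
        build_llama_command(model, prompt),
        capture_output=True,
        encoding="utf-8",
        errors="replace",  # don't fail on odd bytes in the model's output
        check=True,        # raise CalledProcessError if llama.exe fails or crashes
    )
    return result.stdout


if __name__ == "__main__" and LLAMA_EXE.exists() and MODEL.exists():
    print(generate("You are an AI model named Friday having a conversation with Karthik."))
```

Passing the arguments as a list avoids any shell quoting issues with the prompt.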

What next

LLaMA generates text autoregressively: it emits one token at a time and feeds each new token back in to predict the next one. I want to see if I can wrap an API around it that predicts the next words or sentences while I write my blog posts. Of course, as mentioned, it crashes frequently because of the resource limits, and it absolutely wrecks my poor hard disk. But hey, no risk, no fun.
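The token-by-token loop can be illustrated with a toy stand-in for the model. In the sketch below, a tiny hand-written bigram table plays the role of LLaMA's next-token prediction. This is purely an assumption for illustration: the real model scores its whole vocabulary using all previous tokens, not just the last one. Greedy decoding then picks the most likely next token until an end marker or a length limit is reached; `NEXT_TOKEN_PROBS` and `generate_greedy` are hypothetical names.

```python
# Hypothetical toy "model": probabilities of the next token given the last one.
NEXT_TOKEN_PROBS = {
    "<s>": {"I": 0.6, "You": 0.4},
    "I": {"love": 0.7, "write": 0.3},
    "You": {"write": 1.0},
    "love": {"data": 0.8, "</s>": 0.2},
    "write": {"blogs": 0.9, "</s>": 0.1},
    "data": {"platforms": 0.6, "</s>": 0.4},
    "blogs": {"</s>": 1.0},
    "platforms": {"</s>": 1.0},
}


def generate_greedy(max_new_tokens=10):
    """Greedy autoregressive decoding: each output token becomes the next input."""
    tokens = ["<s>"]
    for _ in range(max_new_tokens):
        candidates = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(candidates, key=candidates.get)  # most likely next token
        if next_token == "</s>":  # end-of-sequence marker stops generation
            break
        tokens.append(next_token)
    return " ".join(tokens[1:])


print(generate_greedy())  # prints "I love data platforms"
```

A next-word suggestion API would run the same loop with a small `max_new_tokens`, starting from the text typed so far instead of `<s>`.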

I am Karthik, I love exploring data technologies and building scalable data platforms.
