We have seen how to install Apache Spark and how to code a basic word count program. Now it is time to dig deeper into the framework and understand how things work, while also going through some of the jargon associated with it.

Components of Apache Spark

Apache Spark has a number of components that provide various features. Below is a list with details about them.

- Spark Core

- The core component provides the execution engine of Spark. All other components are built on top of the core. This is the component where the in-memory computing capabilities of Spark come from, along with support for RDDs, I/O functions, scheduling, monitoring, etc.

- Spark SQL

- This is one of the most widely used optional components of Spark. The SQL component provides structured data processing capabilities through DataFrames and Datasets, while also providing an interactive SQL shell for data analysts, data scientists and business intelligence folks to run queries on.

- The SQL component can run existing Hive queries without modification, which enables Spark to be used as a querying engine as well.

- Spark Structured Streaming

- Spark SQL also provides the structured streaming capability, which is an alternative to Spark Streaming.

- Structured Streaming offers exactly-once guarantees with latencies of 100 milliseconds or more, and is suitable for simple use cases.

- Structured Streaming provides high-level DataFrame/Dataset APIs for easy processing and, like Spark Streaming, is based on micro-batching.

- Continuous Processing is available as an experimental feature.

- Spark Streaming

- The streaming component enables Apache Spark to process data streams in near real time. Although it is called streaming, Spark actually micro-batches the data into a stream of RDDs, and each RDD is processed as a batch.

- The component readily integrates with many sources like Kafka, HDFS, Twitter, etc.

- Spark Streaming guarantees at-least-once delivery. Because data is processed in micro-batches, latency is bounded by the batch interval and is typically in the range of a few hundred milliseconds to seconds, rather than a few milliseconds.

- Spark Streaming works on DStreams and does not provide DataFrame/Dataset APIs.

- Spark MLlib

- The MLlib component is used to run machine learning algorithms on Spark in a distributed way.

- Being able to run ML algorithms on big data sets with relative ease and speed helps brands gain insights from data and make critical business decisions faster.

- GraphX

- The GraphX component is designed to run graph algorithms at scale on Spark clusters.

- It comes with many graph processing algorithms built in, which makes it easy to run these algorithms on huge datasets.

Concepts and Jargon

Before getting into the technical details of how things work, let's go through a few concepts of Apache Spark. These need to be understood clearly, as they form the foundation for your Spark journey.

- RDD [Resilient Distributed Dataset]

- An RDD is an immutable, distributed collection of records that is partitioned logically. Each of these partitions can be processed independently, thus providing parallelism.

- The resiliency/fault tolerance of an RDD comes from the lineage graph (more information below).

- If a partition of an RDD is lost, Spark does not recompute the entire RDD. Only the lost partition is recomputed using the lineage graph.

- DataFrames

- DataFrames are structured data with named columns, similar to tables in a traditional relational database.

- A DataFrame can be considered a Dataset of "Row"s. Row is a generic untyped data structure.

- Datasets

- Datasets provide strong type-safety and can notify both syntax and analysis errors at compile time.

- A Dataset can be created from a DataFrame by providing a case class that describes its rows.

- Both DataFrames and Datasets come from Spark SQL and thus offer optimizations through Catalyst.

- DStreams [Discretized Streams]

- DStreams are the abstraction that Spark Streaming provides over a continuous stream of RDDs.

- Each RDD in a DStream contains the data of a certain time interval.

- Operations applied to DStreams are executed on all RDDs of the DStream.
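The micro-batching idea behind DStreams can be sketched in plain Python. This is a toy model and not the Spark Streaming API: the function `micro_batches`, the sample events and the 2000 ms interval are all assumptions made up for illustration.

```python
from collections import defaultdict


def micro_batches(events, batch_interval_ms):
    """Group (timestamp_ms, value) events into fixed, consecutive time intervals."""
    batches = defaultdict(list)
    for timestamp_ms, value in events:
        # Start of the interval this event falls into.
        batch_start = (timestamp_ms // batch_interval_ms) * batch_interval_ms
        batches[batch_start].append(value)
    return dict(sorted(batches.items()))


# Hypothetical events: (arrival time in milliseconds, payload).
events = [(500, "a"), (1200, "b"), (1900, "c"), (4100, "d")]
batches = micro_batches(events, batch_interval_ms=2000)

# An operation applied to the "stream" is applied to every batch in it.
uppercased = {start: [v.upper() for v in values] for start, values in batches.items()}
```

Each dictionary entry plays the role of one RDD in the DStream: it holds the data of one interval, and the same operation runs on all of them.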

- DAG [Directed Acyclic Graph]

- The Spark DAG is a graph structure where the vertices represent RDDs and the edges represent the operations to be performed on them.

- The DAG can be visualized on the Spark UI and shows the entire collection of transformations and actions in a Spark application.

- Lineage Graph

- The lineage graph of an RDD shows how to arrive at that RDD through its parent RDDs.

- The lineage graph does not hold any information about the actions to be performed on an RDD. It holds only the transformations required to arrive at that RDD.

- When some partitions of an RDD, or the entire RDD, are lost, Spark uses the lineage graph to rebuild them from the source data or from intermediate cached data.
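To make the lineage idea concrete, here is a small plain-Python sketch. It is not how Spark implements RDDs: `ToyRDD` and its methods are hypothetical names, and the three in-memory lists stand in for source partitions. Each object only remembers its parent and the function needed to derive a partition from it, so a single lost partition can be rebuilt on demand.

```python
class ToyRDD:
    """Toy stand-in for an RDD: stores a recipe, not the data."""

    def __init__(self, num_partitions, compute, parent=None, name="source"):
        self.num_partitions = num_partitions
        self.compute_partition = compute  # function: partition index -> records
        self.parent = parent              # link that forms the lineage
        self.name = name

    def map(self, fn):
        # Only the recipe is recorded here; no data is touched yet.
        return ToyRDD(
            self.num_partitions,
            lambda i: [fn(x) for x in self.compute_partition(i)],
            parent=self,
            name="map",
        )

    def filter(self, predicate):
        return ToyRDD(
            self.num_partitions,
            lambda i: [x for x in self.compute_partition(i) if predicate(x)],
            parent=self,
            name="filter",
        )

    def lineage(self):
        # Walk back through the parents to list the transformations in order.
        node, chain = self, []
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return list(reversed(chain))


source_data = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # three source partitions
source = ToyRDD(3, lambda i: list(source_data[i]))
result = source.map(lambda x: x * 10).filter(lambda x: x > 20)

lineage_chain = result.lineage()
# Pretend partition 1 of `result` was lost: only that partition is rebuilt.
recovered = result.compute_partition(1)
```

Note that the chain contains only transformations. Nothing in it describes what will eventually be done with the result.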

- Partitions

- Partitions are subsets of data that are logically split to enable parallel processing.

- Shuffles

- A shuffle is the movement of data from one node/executor to another through the network.

- Shuffles occur when data from multiple partitions needs to be brought together on a single node to perform an operation such as an aggregation.

- Shuffles are among the most expensive operations in Spark, because they involve serialization, disk I/O and network transfer. Optimization of Spark code usually starts with reducing shuffles before moving on to other parts.
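A rough plain-Python sketch of what a shuffle does is shown below. It is a simplification and not Spark's shuffle implementation: the helper names, the CRC32-based hash and the sample records are assumptions chosen for illustration. Records are redistributed by key so that equal keys end up in the same partition, and the counter tracks how many records would have had to leave their original partition.

```python
import zlib


def target_partition(key, num_partitions):
    # Deterministic hash, so equal keys always map to the same partition.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def shuffle(partitions, num_partitions):
    """Redistribute (key, value) records so that equal keys share a partition."""
    shuffled = [[] for _ in range(num_partitions)]
    moved = 0
    for source_index, records in enumerate(partitions):
        for key, value in records:
            destination = target_partition(key, num_partitions)
            if destination != source_index:
                moved += 1  # on a cluster, this record would cross the network
            shuffled[destination].append((key, value))
    return shuffled, moved


partitions = [
    [("apple", 1), ("pear", 1)],
    [("apple", 1), ("plum", 1)],
    [("pear", 1), ("apple", 1)],
]
shuffled, moved = shuffle(partitions, num_partitions=3)

# After the shuffle each key lives in one partition, so totals can be computed locally.
totals = [
    {key: sum(v for k, v in part if k == key) for key in {k for k, _ in part}}
    for part in shuffled
]
```

The value of `moved` is the cost being paid: every one of those records is serialized and transferred before the aggregation can even start.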

- Locality

- Hadoop was designed with the idea of taking the code/computation to the data, rather than bringing the data to the code. This is locality / data locality.

- Data is usually far bigger than the code that processes it, so it makes a lot of sense to take your application to the machine where the data resides and run the code there.

- If the data resides on the cluster, Spark also tries to process the partitions on the same node on which they reside.

- However, in current environments, data is generally kept in cloud storage buckets rather than on the cluster itself, so this kind of locality often does not apply.

- Data Skewness

- Data skewness is when the partitions are imbalanced in size, i.e., one or a few partitions are considerably bigger than the others.

- Data skewness generally occurs when the underlying source is in a non-splittable format (like a gzip-compressed file) or when a poorly distributed key is used to partition the data manually.
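The effect of a badly distributed key can be illustrated with a small plain-Python sketch. The helper names, the CRC32-based hash and the country-code keys are assumptions for illustration only. One "hot" key accounts for 90% of the records, so whichever partition it hashes to ends up far larger than the rest.

```python
import zlib


def partition_sizes(keys, num_partitions):
    """Count how many records land in each partition when hashing by key."""
    sizes = [0] * num_partitions
    for key in keys:
        sizes[zlib.crc32(key.encode("utf-8")) % num_partitions] += 1
    return sizes


def skew_ratio(sizes):
    # Size of the largest partition relative to the average partition size.
    return max(sizes) / (sum(sizes) / len(sizes))


# Hypothetical dataset: 90 records share one key, 10 records use five other keys.
keys = ["IN"] * 90 + ["US", "FR", "DE", "JP", "BR"] * 2
sizes = partition_sizes(keys, num_partitions=4)
ratio = skew_ratio(sizes)
```

A ratio close to 1 means balanced partitions. Here the largest partition is several times the average, so the task processing it would keep running long after the others have finished.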

- Transformations

- Data transformation is the process of changing the data to required format/structure/value or even derive new data.

- Spark transformations are lazily evaluated, i.e., they are not computed unless an action is performed on them.

- When you apply a transformation on an RDD, you get another RDD as output.

- Narrow Transformation

- A transformation that can work on every partition of an RDD independently, without needing data from other partitions.

- Examples: map(), filter()

- Wide Transformation

- A transformation that needs data from multiple partitions to be brought together to perform its operation.

- A wide transformation generally causes a shuffle and thus creates a new stage.

- Examples: reduceByKey(), groupByKey()
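The difference between the two kinds of transformation can be sketched in plain Python. This is an illustration with made-up helpers (`map_partitions` and `reduce_by_key` are not Spark functions, although they mimic map() and reduceByKey()). The narrow step handles each partition on its own, while the wide step cannot produce a correct answer for a key without seeing records from several partitions.

```python
partitions = [["a", "b", "a"], ["b", "c"], ["a", "c", "c"]]


def map_partitions(parts, fn):
    # Narrow: each output partition depends on exactly one input partition.
    return [[fn(record) for record in part] for part in parts]


def reduce_by_key(parts, fn):
    # Wide: the value for a key combines records from many input partitions.
    merged = {}
    for part in parts:
        for key, value in part:
            merged[key] = fn(merged[key], value) if key in merged else value
    return merged


pairs = map_partitions(partitions, lambda word: (word, 1))
counts = reduce_by_key(pairs, lambda x, y: x + y)

# Counting inside a single partition gives only a partial answer for "a".
partial_a = sum(value for key, value in pairs[0] if key == "a")
```

The gap between `partial_a` and `counts["a"]` is exactly the data that has to be shuffled in from other partitions.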

- Actions

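- Actions in Spark trigger the pending transformations on RDDs and produce the resultant output, which is either a value returned to the driver or data written to external storage, not another RDD.

- Examples: count(), collect(), reduce() and saveAsTextFile() on RDDs; show() and write operations on DataFrames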

- Workers

- Workers are the nodes in a cluster. The term refers to the actual machines in the cluster.

- Executors

- Executors are processes that run on the worker nodes. On YARN, each executor runs inside a container.

- A worker, by default, will have only one executor, but it can be configured to run more than one.

- Jobs

- Jobs are parallel computation units in Spark. Each action performed in a Spark application creates a job.

- A job can have multiple stages.

- Stages

- A stage in Spark is a group of tasks that can run in parallel without a shuffle. A stage that depends on another stage has to wait for it, i.e., stage 1 tasks can begin only after all the tasks of stage 0 are completed.

- A stage is generally created as a result of a wide transformation.
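As a rough illustration of how wide transformations cut a job into stages, the sketch below splits a list of operation names at every wide operation. It is a simplification and not Spark's scheduler: `split_into_stages` and the step name "read" are hypothetical, and the set of wide operations contains only the two examples mentioned earlier.

```python
# Only the wide transformations named in this post; a real list would be longer.
WIDE_TRANSFORMATIONS = {"reduceByKey", "groupByKey"}


def split_into_stages(operations):
    """Start a new stage whenever a wide transformation is encountered."""
    stages = [[]]
    for name in operations:
        if name in WIDE_TRANSFORMATIONS and stages[-1]:
            stages.append([])  # shuffle boundary: later work waits for earlier stages
        stages[-1].append(name)
    return stages


job = ["read", "map", "filter", "reduceByKey", "map", "groupByKey", "filter"]
stages = split_into_stages(job)
```

Narrow operations stay in the stage they started in, which is why a chain of map() and filter() calls adds no extra stages.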

- Tasks

- Tasks are serialized function objects sent to the executors. The executor deserializes and runs them.

- Tasks are the smallest unit of work in Spark.

- Each task, by default, uses 1 CPU core of an executor. This is configurable through spark.task.cpus to allocate more.
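The arithmetic behind this setting is simple enough to sketch. The function below is a hypothetical helper, not a Spark API, and the executor counts are made-up numbers. It assumes that the number of tasks an executor can run at once is its core count divided by the cores reserved per task.

```python
def concurrent_tasks(num_executors, executor_cores, task_cpus=1):
    """Estimate how many tasks can run at the same time across the application."""
    slots_per_executor = executor_cores // task_cpus
    return num_executors * slots_per_executor


# Hypothetical application: 4 executors with 5 cores each.
default_slots = concurrent_tasks(num_executors=4, executor_cores=5)
# Reserving 2 cores per task leaves one core per executor unused.
two_cpu_slots = concurrent_tasks(num_executors=4, executor_cores=5, task_cpus=2)
```

Raising the cores per task lowers parallelism, so it only pays off when each task is itself multi-threaded.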

- Driver

- The driver program in Spark is the entry point to the application.

- It is the process in which your main() method runs and creates a SparkSession.

- SparkSession

- SparkSession is a session object used to access Spark services in your code.

- SparkSession brings together the following contexts:

- SparkContext, used to access the core API

- SQLContext, used to access Spark SQL

- HiveContext, used to access Hive databases

- StreamingContext, used to access Spark Streaming, is not part of SparkSession and is still created separately from the SparkContext

- Combining these contexts into SparkSession, since Spark 2.x, has made Spark easier for developers to work with.

- Broadcasts

- Broadcasts in Spark refer to sending a read-only copy of data to all the worker nodes.

- It is usually used when joining a small table with a bigger table (broadcast join).

- It is also used to send any external data that is needed to perform operations on all partitions.
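A broadcast join can be sketched in plain Python as follows. This is a toy model rather than Spark's implementation: the tables, the `broadcast_join` helper and the inner-join behaviour are assumptions for illustration. The small table is copied to every partition of the big table, so each partition can be joined locally without moving the big table's records.

```python
# Small lookup table: cheap to copy everywhere.
countries = {"IN": "India", "US": "United States"}

# Big table, already split into partitions of (order_id, country_code).
orders_partitions = [
    [("o1", "IN"), ("o2", "US")],
    [("o3", "IN"), ("o4", "FR")],
]


def broadcast_join(partitions, small_table):
    joined = []
    for part in partitions:
        lookup = dict(small_table)  # each partition works with its own read-only copy
        joined.append(
            [(order_id, code, lookup[code]) for order_id, code in part if code in lookup]
        )
    return joined


joined = broadcast_join(orders_partitions, countries)
```

The orders never leave their partitions. Only the small dictionary travels, which is why this approach avoids a shuffle of the bigger table.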

- Accumulators

- Accumulators in Spark are data structures that are shared across executors and allow you to aggregate metrics/data points from executors.

- Accumulators can only be created and read on the driver.

- Accumulators are write-only on executors.

- Accumulators can be used only when the operation performed is commutative and associative.

- Examples: addition, multiplication, collecting items into a list when order does not matter

- Accumulator updates made inside transformations may be applied more than once when tasks are re-executed, for example on retries or during speculative execution, and may end up with inconsistent data.
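Why the operation has to be commutative and associative can be shown with a plain-Python sketch. It is not the Spark accumulator API: `merge_updates` is a hypothetical stand-in for the driver merging the updates it receives, and the three numbers represent partial results from three tasks that may finish in any order.

```python
from functools import reduce
from itertools import permutations


def merge_updates(updates, op, zero):
    """Driver-side merge of the updates sent back by tasks."""
    return reduce(op, updates, zero)


task_updates = [3, 5, 7]  # one partial result per task

# Addition: every arrival order gives the same total.
sum_results = {
    merge_updates(order, lambda acc, update: acc + update, 0)
    for order in permutations(task_updates)
}

# "Last write wins" is not commutative: the result depends on arrival order.
last_results = {
    merge_updates(order, lambda acc, update: update, None)
    for order in permutations(task_updates)
}

# If the third task is re-executed, its update is counted twice.
total_with_rerun = merge_updates(task_updates + [7], lambda acc, update: acc + update, 0)
```

The single value in `sum_results` is what makes addition safe to use. The last line shows why even a safe operation can give an inflated value when a task runs more than once.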

- Checkpoint

- Checkpointing refers to storing the state of the RDDs of a Spark application to disk.

- Checkpointing helps in restarting a failed job without loss of data.

- When the application restarts, it reads the state stored in the checkpoint directory and resumes from where it left off.

- Checkpointing is a feature of Spark Core.

- Watermarking

- A watermark is a timestamp threshold for late data. If any data arrives with an event timestamp older than the threshold, that data is dropped.

- In other terms, only data whose timestamp is at or after the watermark is used for processing.
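A simplified model of watermarking is sketched below in plain Python. It is not the Structured Streaming API: the function name, the batch layout and the allowed lateness of 10 time units are assumptions for illustration. The watermark is taken as the largest event time seen so far minus the allowed lateness, it is advanced after each batch, and events older than it are dropped.

```python
def apply_watermark(batches, allowed_lateness):
    """Split events into accepted and dropped using a moving watermark."""
    max_event_time = None
    watermark = None
    accepted, dropped = [], []
    for batch in batches:
        for event_time, value in batch:
            if watermark is not None and event_time < watermark:
                dropped.append(value)  # too old: behind the watermark
            else:
                accepted.append(value)
        if batch:
            # Advance the watermark once the batch has been processed.
            batch_max = max(event_time for event_time, _ in batch)
            if max_event_time is None or batch_max > max_event_time:
                max_event_time = batch_max
            watermark = max_event_time - allowed_lateness
    return accepted, dropped


# Hypothetical batches of (event_time, value); "d" and "e" arrive out of order.
batches = [
    [(100, "a"), (105, "b")],
    [(120, "c"), (98, "d")],
    [(104, "e"), (125, "f")],
]
accepted, dropped = apply_watermark(batches, allowed_lateness=10)
```

Event "d" is late but still ahead of the watermark of 95 in force when it arrives, so it is kept. Event "e" arrives after the watermark has moved to 110 and is dropped.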

- Speculative Execution

- Speculative execution in Spark is the process of launching duplicate copies of tasks that are already running, based on the runtime and completion information of the other tasks.

- Example: When a task is stuck or is taking much longer than the others while most of them have completed, and speculative execution is enabled, Spark launches the same task on another node and uses the result of whichever copy finishes first.

- Speculative execution is off by default.
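The kind of rule used to pick tasks for speculation can be sketched as follows. This is a simplified plain-Python model, not Spark's scheduler code: the function name, the task ids and the `quantile` and `multiplier` values are illustrative assumptions and should not be read as Spark's defaults. A running task becomes a candidate once enough tasks have finished and it has been running much longer than the median of the finished ones.

```python
from statistics import median


def tasks_to_speculate(completed_durations, running_elapsed, total_tasks, quantile, multiplier):
    """Return ids of running tasks that look slow compared to finished tasks."""
    # Wait until a large enough fraction of the tasks has completed.
    if len(completed_durations) < quantile * total_tasks:
        return []
    threshold = multiplier * median(completed_durations)
    return [task_id for task_id, elapsed in running_elapsed.items() if elapsed > threshold]


completed = [10, 11, 9, 10, 12, 10, 11, 10]   # seconds, 8 of 10 tasks finished
running = {"task-8": 12, "task-9": 40}         # seconds elapsed so far

candidates = tasks_to_speculate(completed, running, total_tasks=10, quantile=0.75, multiplier=1.5)
too_early = tasks_to_speculate(completed[:5], running, total_tasks=10, quantile=0.75, multiplier=1.5)
```

With a median of 10 seconds and a multiplier of 1.5, only the task that has been running for 40 seconds is picked. With just five finished tasks, nothing is speculated yet.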

- Catalyst Optimizer

- Catalyst is the query optimizer in Spark SQL.

- Tungsten

- Project Tungsten is an umbrella term used to refer to the multiple ways in which Spark was made to use CPU and memory more efficiently.

- Read more about it on the Databricks blog.

- Kryo

- Kryo is a high-performance serialization library supported by Spark that allows fast serialization and deserialization.

- It uses less memory and produces more compact serialized data compared to Java's default serialization.

Architecture

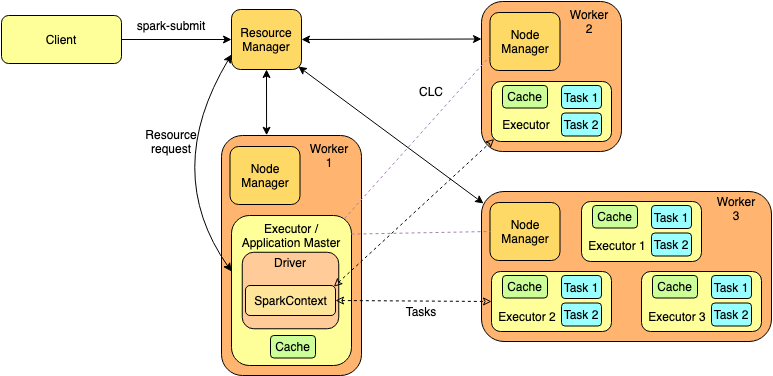

Spark applications run on distributed clusters and follow a master/worker architecture. Spark supports multiple cluster managers like Mesos and YARN, and comes with a standalone one of its own. Production deployments generally use YARN (Yet Another Resource Negotiator), and this post is based on such a setup.

When you submit a Spark application using the spark-submit command, the spark-submit utility interacts with the resource manager of the cluster and creates a container in one of the nodes, called the Application Master.

The driver program runs within the application master (in cluster deploy mode) and creates a SparkContext. The application master negotiates with the resource manager to get resources as requested by Spark, along with locality preferences.

Once the resource manager identifies resources, it sends a lease with security tokens to the application master. (Resources here refer to being able to run containers on worker nodes, with the required CPU and memory.)

The application master gains access to these resources by sending the lease to the individual node managers, asking them to create executor containers by passing a CLC (container launch context), which includes information about environment variables, the jar/Python/other files, etc.

The SparkContext then, through the TaskScheduler, creates tasks and allocates them to these executors as per the DAG generated.

The executors communicate directly with the driver, and the driver keeps listening to executor heartbeats to know their status.

When the tasks are completed, the SparkContext is closed and the driver exits. This causes the application master to terminate and thus releases the leased resources back to the cluster.

This is all you need to know about Apache Spark to get started with building amazing distributed applications.

I am Karthik, I love exploring data technologies and building scalable data platforms.
